This article was last updated on 2023-08-25 09:30 and may be inaccurate.

Artificial intelligence (AI) image generators are advancing rapidly, and it is becoming increasingly difficult even for specialized AI tools to distinguish real images from fake ones!

In January, OpenAI’s text-to-image generator DALL-E could render human-like images, but they still had noticeable flaws.

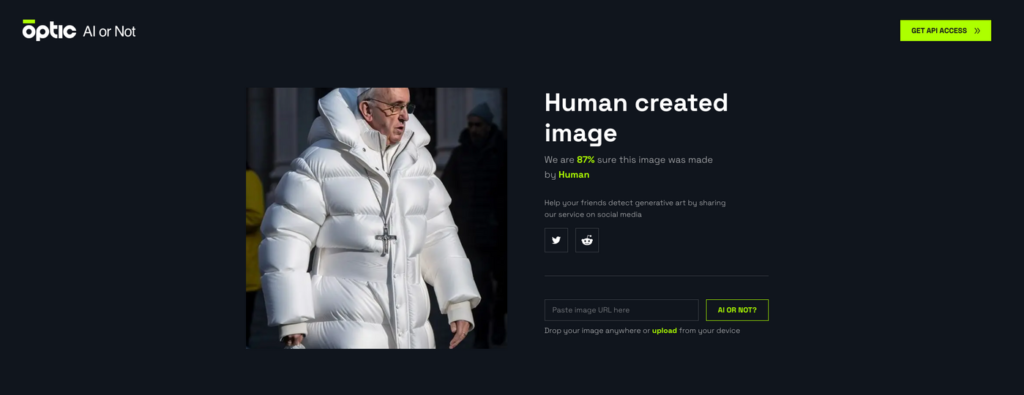

By March, however, some AI-generated images were realistic enough to fool many people. Examples include pictures of Donald Trump being arrested, Pope Francis wearing a white puffer jacket, and French President Emmanuel Macron walking through a crowd of protesters.

Developers are building tools that analyze images to detect whether they were generated by AI, but these detectors can be fooled too, especially when they have not kept pace with the image generators they are meant to monitor.

For example, Optic, an AI trust and security company, was 87% confident that the image of the Pope in a puffer jacket had been taken by a human rather than generated by AI.

Unless someone points it out, it can be hard to tell that these images are fake. AI-generated content may be entertaining, but it also carries risks for everyday interactions and can be used to spread false information.

Perhaps it is time to pause AI development temporarily.